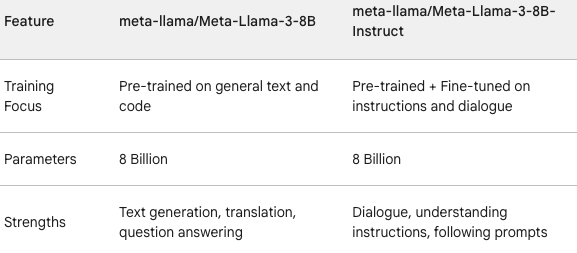

meta-llama/Meta-Llama-3-8B:

This is the base model from the Meta Llama 3 family, with 8 billion parameters. It is a pretrained LLM trained on a very large corpus of text and code, and it performs well on general tasks such as text generation, translation, and question answering. Because it has not been instruction-tuned, however, it is not specifically optimized for following instructions or holding a dialogue.
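A base model continues whatever text it is given, so it is usually prompted with a pattern to complete rather than with a direct instruction. The minimal sketch below builds a plain-text few-shot translation prompt. The function name `build_few_shot_prompt` and the example pairs are hypothetical, and the resulting string would be passed to whichever generation API you use.

```python
def build_few_shot_prompt(examples, query):
    """Build a plain-text few-shot prompt for a base (non-instruct) model.

    The model is expected to continue the pattern, so the prompt ends
    exactly where the next answer should begin.
    """
    lines = []
    for source, target in examples:
        lines.append(f"English: {source}")
        lines.append(f"French: {target}")
        lines.append("")  # blank line separates the examples
    lines.append(f"English: {query}")
    lines.append("French:")  # the model is expected to complete this line
    return "\n".join(lines)


# Hypothetical example pairs, for illustration only
examples = [("Good morning", "Bonjour"), ("Thank you", "Merci")]
print(build_few_shot_prompt(examples, "See you tomorrow"))
```

The examples do the work an instruction would do for a chat model: they show the base model the format the continuation should take.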

meta-llama/Meta-Llama-3-8B-Instruct:

This is the instruction-tuned version of meta-llama/Meta-Llama-3-8B. It uses the same 8-billion-parameter architecture but has been further fine-tuned on data designed for instruction following and dialogue. As a result, it is better at understanding prompts, following instructions, and carrying on a conversation.
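The Instruct model expects its input in a specific chat format, with role headers and end-of-turn tokens. In practice, Hugging Face `transformers` builds this for you via `tokenizer.apply_chat_template`, using the template shipped with the model. The hand-written function below, `format_llama3_chat` (a hypothetical helper name), is only a sketch to show roughly what that template produces.

```python
def format_llama3_chat(messages, add_generation_prompt=True):
    """Render a list of {"role": ..., "content": ...} dicts in the Llama 3 Instruct format.

    Each message is wrapped in header tokens and terminated with <|eot_id|>.
    """
    prompt = "<|begin_of_text|>"
    for message in messages:
        prompt += (
            f"<|start_header_id|>{message['role']}<|end_header_id|>\n\n"
            f"{message['content'].strip()}<|eot_id|>"
        )
    if add_generation_prompt:
        # Open an assistant turn so the model writes the reply next
        prompt += "<|start_header_id|>assistant<|end_header_id|>\n\n"
    return prompt


print(format_llama3_chat([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Translate 'Thank you' into French."},
]))
```

Note that the user can simply state the task here, instead of demonstrating it with examples as the base model requires.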

Choosing the Right Model: The best choice depends on your specific needs:

- General Text Processing: If you need a versatile LLM for tasks like text generation, translation, or question answering, meta-llama/Meta-Llama-3-8B might be sufficient.
- Dialogue and Instruction Following: If your focus is on building chatbots, virtual assistants, or applications that require understanding and responding to instructions, meta-llama/Meta-Llama-3-8B-Instruct is likely a better option due to its optimized training for those tasks.
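The rule of thumb from the two bullets above can be written as a tiny helper. This is only a sketch: the use-case labels in `DIALOGUE_TASKS` and the function name `choose_model` are hypothetical, and a real decision would also weigh evaluation results on your own data.

```python
BASE_MODEL = "meta-llama/Meta-Llama-3-8B"
INSTRUCT_MODEL = "meta-llama/Meta-Llama-3-8B-Instruct"

# Hypothetical use-case labels for dialogue and instruction-following work
DIALOGUE_TASKS = {"chatbot", "virtual_assistant", "instruction_following"}


def choose_model(task: str) -> str:
    """Return the suggested Hugging Face model ID for a use case."""
    if task.lower() in DIALOGUE_TASKS:
        return INSTRUCT_MODEL
    # General text processing (generation, translation, QA) falls back to the base model
    return BASE_MODEL


print(choose_model("chatbot"))
print(choose_model("translation"))
```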

Uncensored variants of Llama 2 are already available, and Llama 3 is following the same route with uncensored versions of its own. Let me know your thoughts in the comments below.