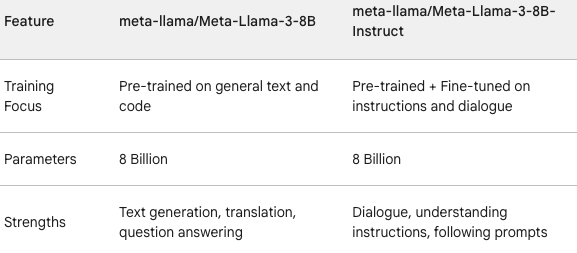

Large Language Models (LLMs) have revolutionised natural language processing, demonstrating a striking ability to generate fluent, human-like text, translate between languages, produce creative writing, and answer questions informatively. Behind this seemingly magical performance lies a key mechanism: masked self-attention. This blog post takes a deep dive into this crucial component, explaining how it works, why it’s essential, and how it shapes the capabilities of LLMs.