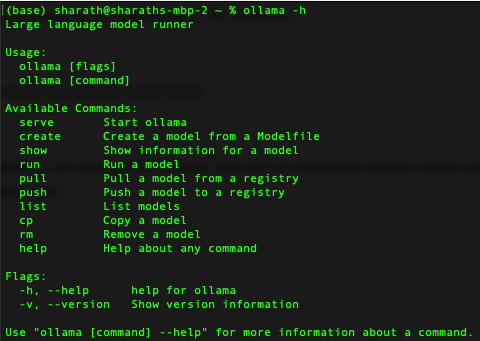

Ollama is a platform designed to make running large language models (LLMs) on your own computer easier. It removes the complexity of managing model weights, configurations, and server setups. Ollama provides an easy-to-use CLI that can download an LLM and have it running in under 5 minutes, depending on your internet connection :)

Let’s get Ollama set up on your local machine in 4 easy steps.

1. Download & Install Ollama:

– Visit the Ollama website: https://ollama.com/

– Download the installer based on your operating system (Mac, Windows, Linux).

– Run the downloaded installer and follow the on-screen instructions.

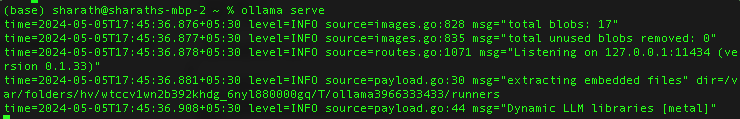

2. Start the Ollama Server:

– Open a terminal or command prompt.

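– Run ollama serve to start the local server. The installer normally adds ollama to your PATH, so this should work from any directory. If the Ollama desktop app is already running, the server has most likely been started for you.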

ollama serve

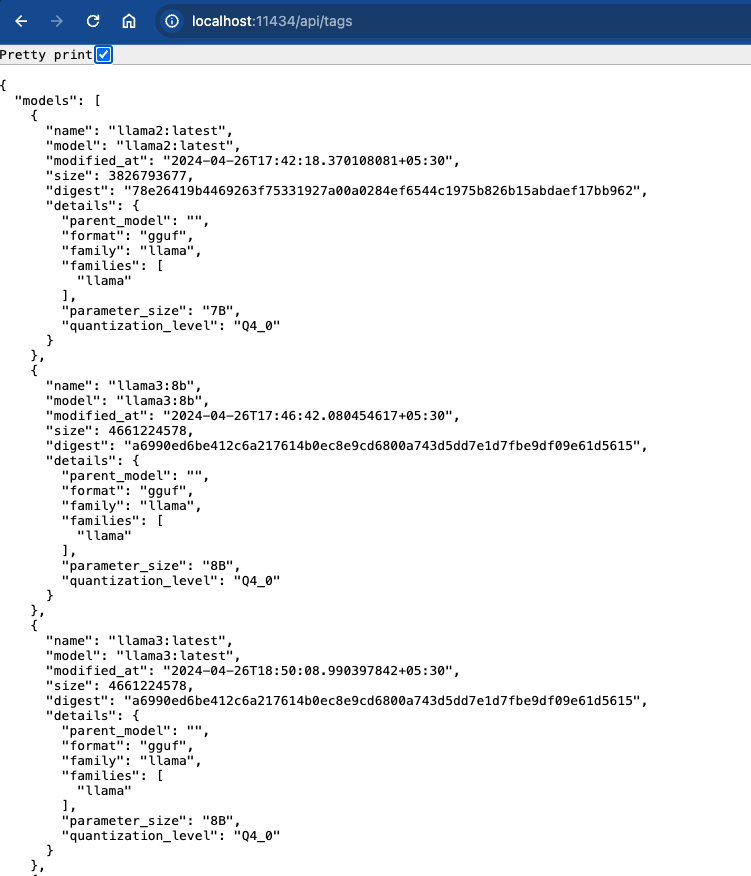

3. Verify Ollama:

Open a web browser and go to http://localhost:11434/api/tags.

If the server is running, you should see a JSON list of the models you have downloaded locally. On a fresh install the list will be empty.

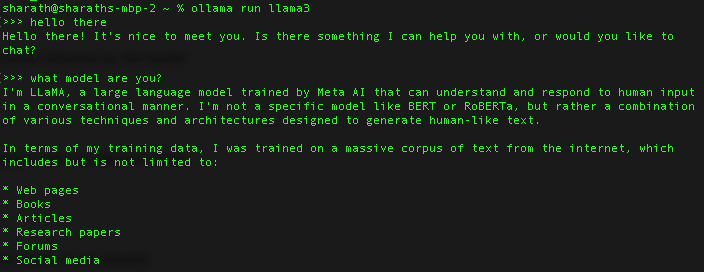
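If you'd rather do this check from a script than a browser, here is a minimal Python sketch using only the standard library. It assumes the default address from the step above and that the response is a JSON object with a `models` list whose entries each have a `name`. The helper names (`model_names`, `list_local_models`) are made up for this example.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default local address used in step 3


def model_names(tags_json: str) -> list[str]:
    """Pull the model names out of the JSON body returned by /api/tags."""
    data = json.loads(tags_json)
    return [model["name"] for model in data.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Ask the local Ollama server which models are already downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return model_names(resp.read().decode("utf-8"))


# Example (needs a running server): print(list_local_models())
```

If the server isn't running, `list_local_models()` raises a connection error, which is itself a quick way to tell that step 2 didn't work.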

4. Run an LLM:

Use the ollama run command followed by the model name.

Ollama will download the model (if not already downloaded) and start an interactive session where you can provide prompts and receive LLM responses.

$ ollama run <model_name>
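The interactive session isn't the only way in. Once a model is downloaded, the same local server can be prompted over HTTP. The sketch below posts to the `/api/generate` endpoint with streaming turned off and reads the `response` field of the reply. The model name `llama3` is only an example, so swap in whatever you pulled, and the function names are hypothetical helpers for this post.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default local address


def build_generate_request(model: str, prompt: str,
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a POST request for /api/generate that asks for one non-streamed reply."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request) as resp:
        return json.loads(resp.read())["response"]


# Example (needs a running server and a downloaded model):
# print(generate("llama3", "Why is the sky blue?"))
```

Setting `stream` to false keeps the example short: the server sends one JSON object instead of a stream of partial responses.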

Ollama provides lots of features along with built-in APIs. If you’re interested in the API details, take a look at the Ollama API documentation. Below are some key features of using a tool like Ollama to run LLMs locally.

Key Features:

- Local Execution: Ollama allows you to run powerful LLMs directly on your machine, eliminating reliance on cloud services.

- Supported Models: It offers a library of open-source LLMs, including Meta’s Llama models and others like BakLLaVA and LLaVA.

- Ease of Use: Ollama simplifies the process with a single model package format and optimized configurations for efficient local execution.

- Multimodal Capabilities: Newer versions of Ollama support multimodal LLMs that can process both text and image data, expanding potential applications.
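
To make the multimodal point concrete: with a vision-capable model, the generate endpoint accepts images as base64-encoded strings in an `images` list next to the text prompt. Here is a rough sketch of building such a request body. The model name `llava` and the helper name are assumptions for illustration, and you still need to POST the payload to `/api/generate` yourself.

```python
import base64
import json


def build_image_payload(model: str, prompt: str, image_bytes: bytes) -> dict:
    """Build a generate payload that sends one image along with the text prompt."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,          # must be a multimodal model, e.g. llava
        "prompt": prompt,
        "images": [encoded],     # list of base64-encoded images
        "stream": False,
    }


# Example: read a local file (the path is hypothetical) and serialize the payload.
# with open("photo.png", "rb") as f:
#     body = json.dumps(build_image_payload("llava", "What is in this picture?", f.read()))
```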

Benefits:

- Cost-Effective: Running LLMs locally can be more affordable than relying on cloud resources.

- Privacy: Local execution offers greater control over data privacy compared to cloud-based services.

- Customization: Ollama allows you to experiment with different models and configurations for tailored results.
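
As a small taste of that kind of experimentation, the generate endpoint takes an `options` object with settings such as `temperature`. The sketch below only builds the request bodies for every model and temperature combination, so you can send them one by one and compare the answers. The model names are examples, not recommendations, and `experiment_payloads` is a made-up helper.

```python
import json


def experiment_payloads(models: list[str], temperatures: list[float],
                        prompt: str) -> list[dict]:
    """Build one generate payload per (model, temperature) combination."""
    return [
        {
            "model": model,
            "prompt": prompt,
            "stream": False,
            "options": {"temperature": temperature},
        }
        for model in models
        for temperature in temperatures
    ]


# Example model names; use whichever models you have downloaded.
payloads = experiment_payloads(["llama3", "mistral"], [0.2, 0.9],
                               "Summarize what Ollama does in one line.")
print(json.dumps(payloads[0], indent=2))
```

Lower temperatures generally give more predictable output and higher ones more varied output, so running the same prompt across a few values is a cheap way to see what suits your use case.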

Hundreds of apps built on Ollama are already popping up for non-commercial use. Let me know in the comments below how you’re planning to use it.